Announcing Dataans v.0.3.0

Pavlo Myroniuk August 03, 2025 #rust #tool #project #tauri #leptos #dataans

GitHub/TheBestTvarynka/Dataans/releases/v.0.3.0.

Intro

The Dataans app has followed a local-first approach since the very beginning. After some time, I started to use the app on many devices and inside my Windows/Linux VMs. I caught myself thinking that it would be good to be able to transfer data from one device to another. Fast forward two months, and I realized that it had become a critical feature for me. After some consideration and a little research, I understood that I wanted multi-device synchronization.

The decentralized Internet, P2P networks, etc., are good. But the current implementation of data synchronization relies on a central sync (backup) server. Synchronization over P2P communication may be implemented in the future. It depends on my needs.

This post turned out to be quite large. Alternatively, you can read the shorter version: github/TheBestTvarynka/Dataans/6c898a01/doc/sync_server.md.

Demo

Before explaining how it works and how I implemented it, I want to show you the demo.

Better to see something once than hear about it a thousand times.

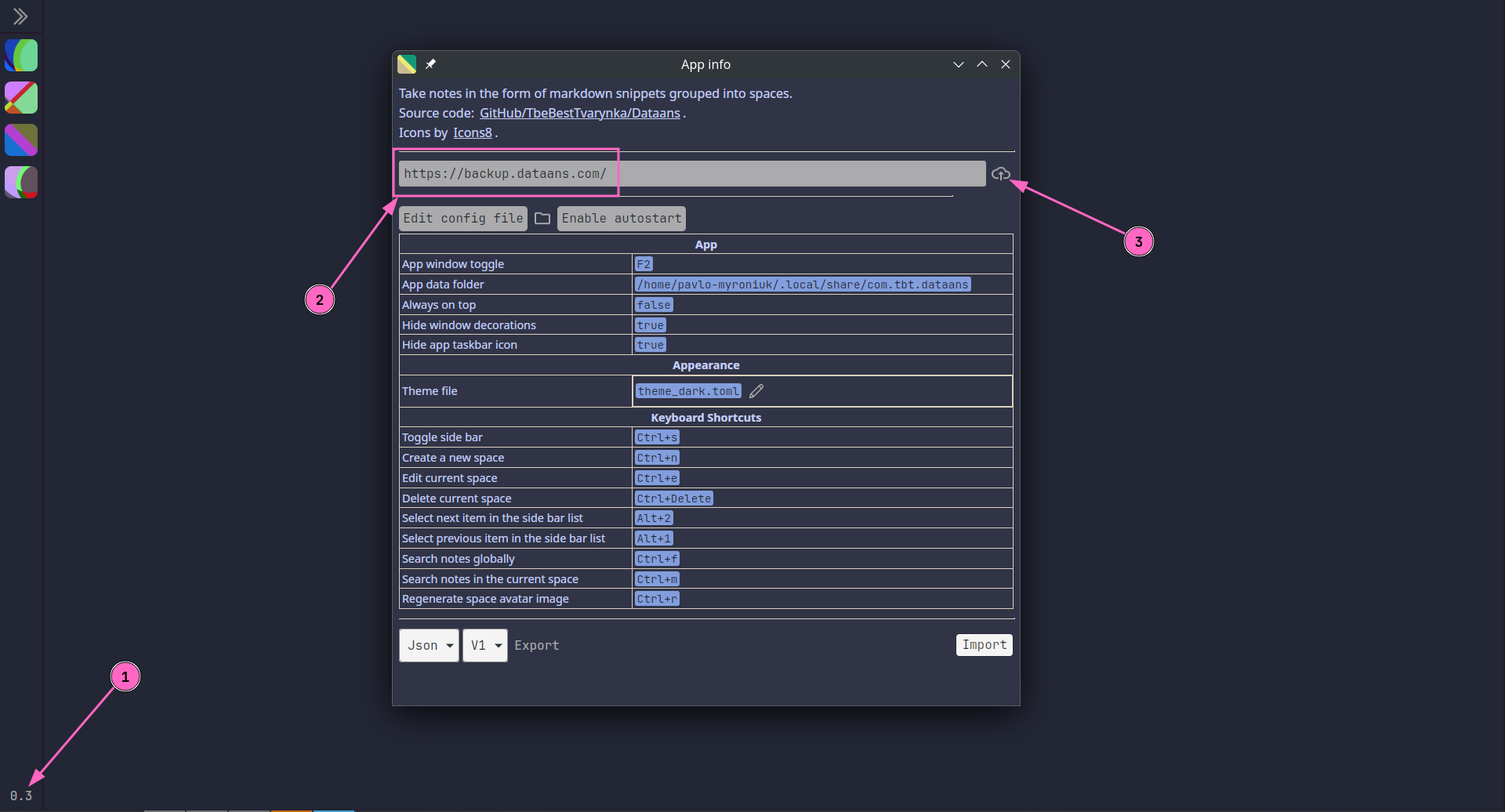

So, first, the user needs to log in. Open the App-info window by clicking on the app version number in the bottom left corner of the main window. Then, type the sync server address and click log in:

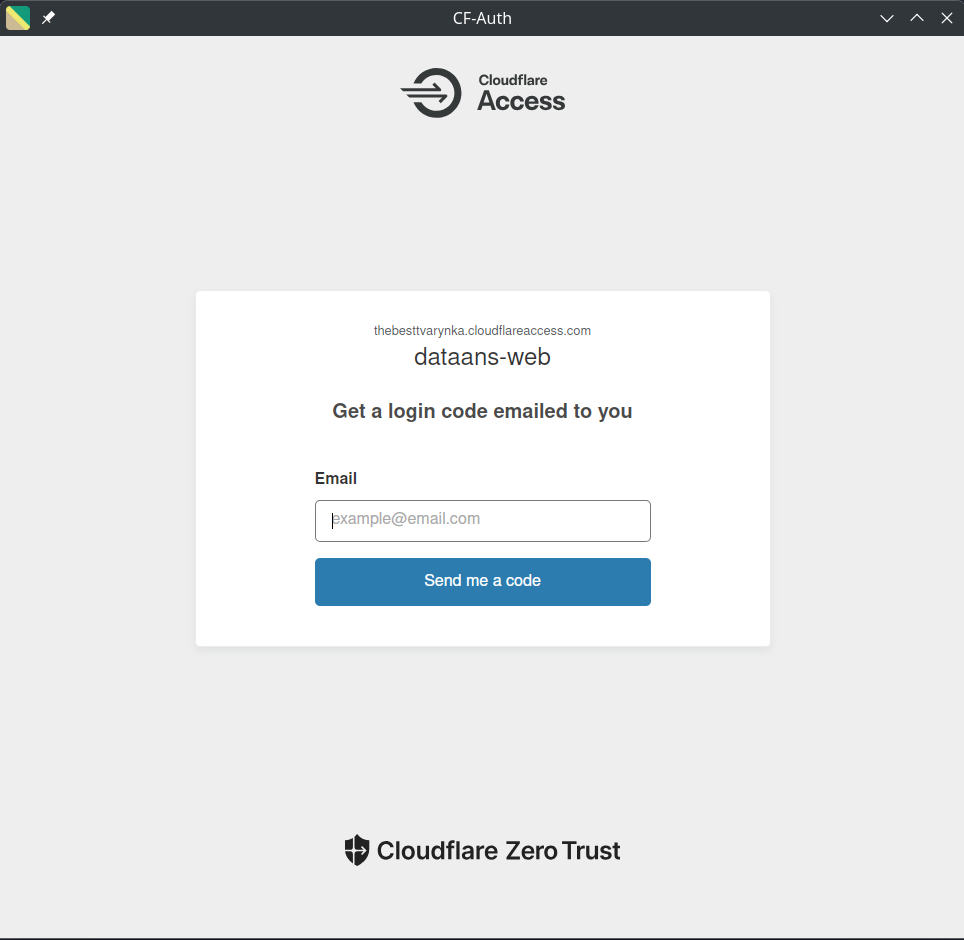

Now the user needs to authorize:

And yes, as you can see, I decided to delegate all authentication-related tasks to Cloudflare. I did not want to implement it manually, and, honestly, it was one of the best decisions I made. Thanks to Cloudflare Zero Trust Access, I only need to enter my email and the code sent to it.

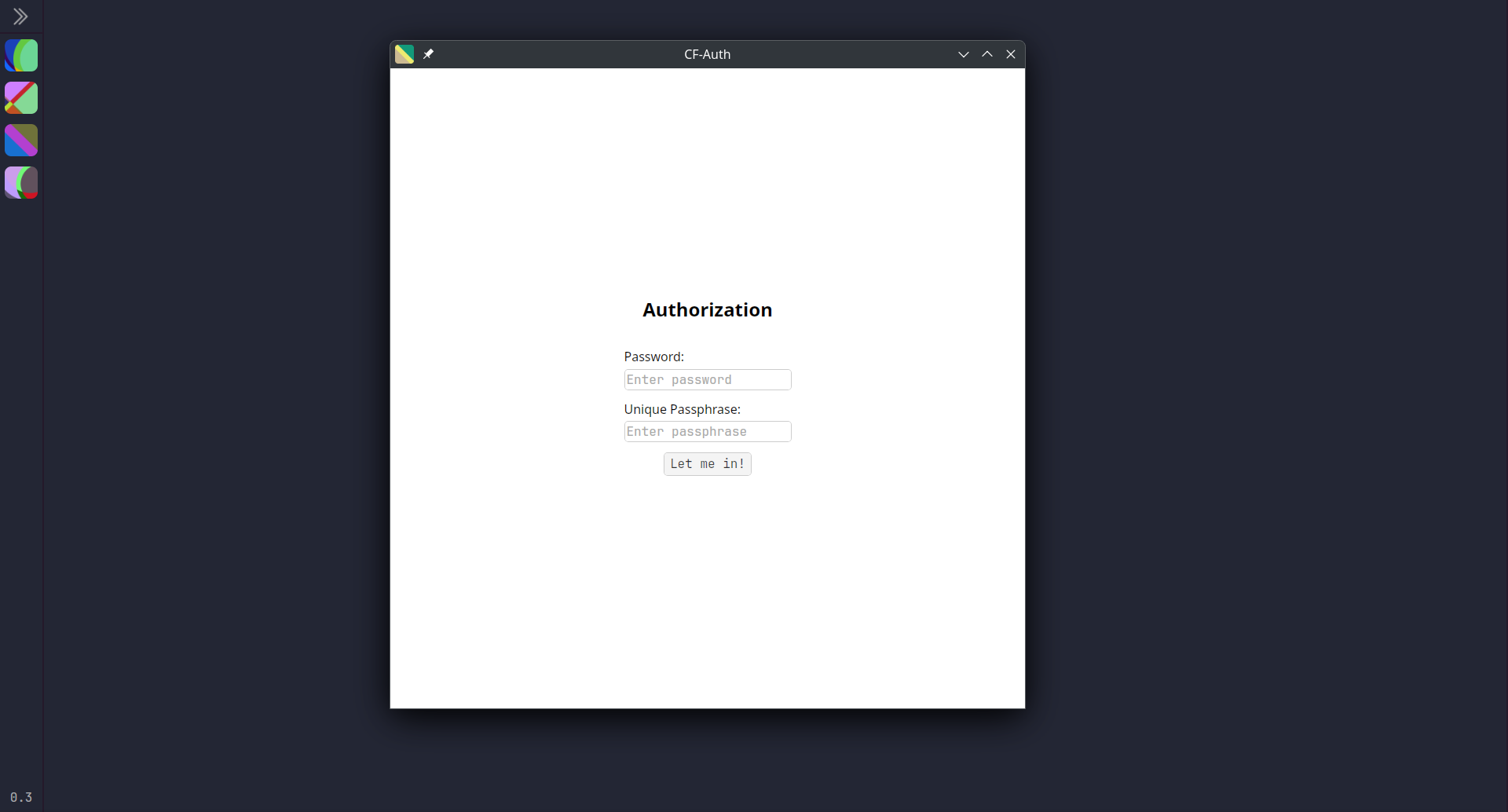

After the successful sign in, the user needs to enter the password and secret passphrase.

At this point, two cases are possible:

- If it is the first sign-in among all of the user's devices (the sync server's database is empty), then the user needs to fill in ONLY the password input. The passphrase will be generated automatically by the app. But if the user wants to use a custom passphrase, they can type it.

- If it is a new sign-in on another device and the sync server's database is not empty (there was a sync from other devices), then the user must type both password AND passphrase.

The user can read the passphrase on any signed-in device in the profile.json.

Why does the app require the user to have a password if all auth has been delegated to Cloudflare?

It is explained in detail in the Auth section. But, in short, the data encryption key is derived from the user's password and passphrase.

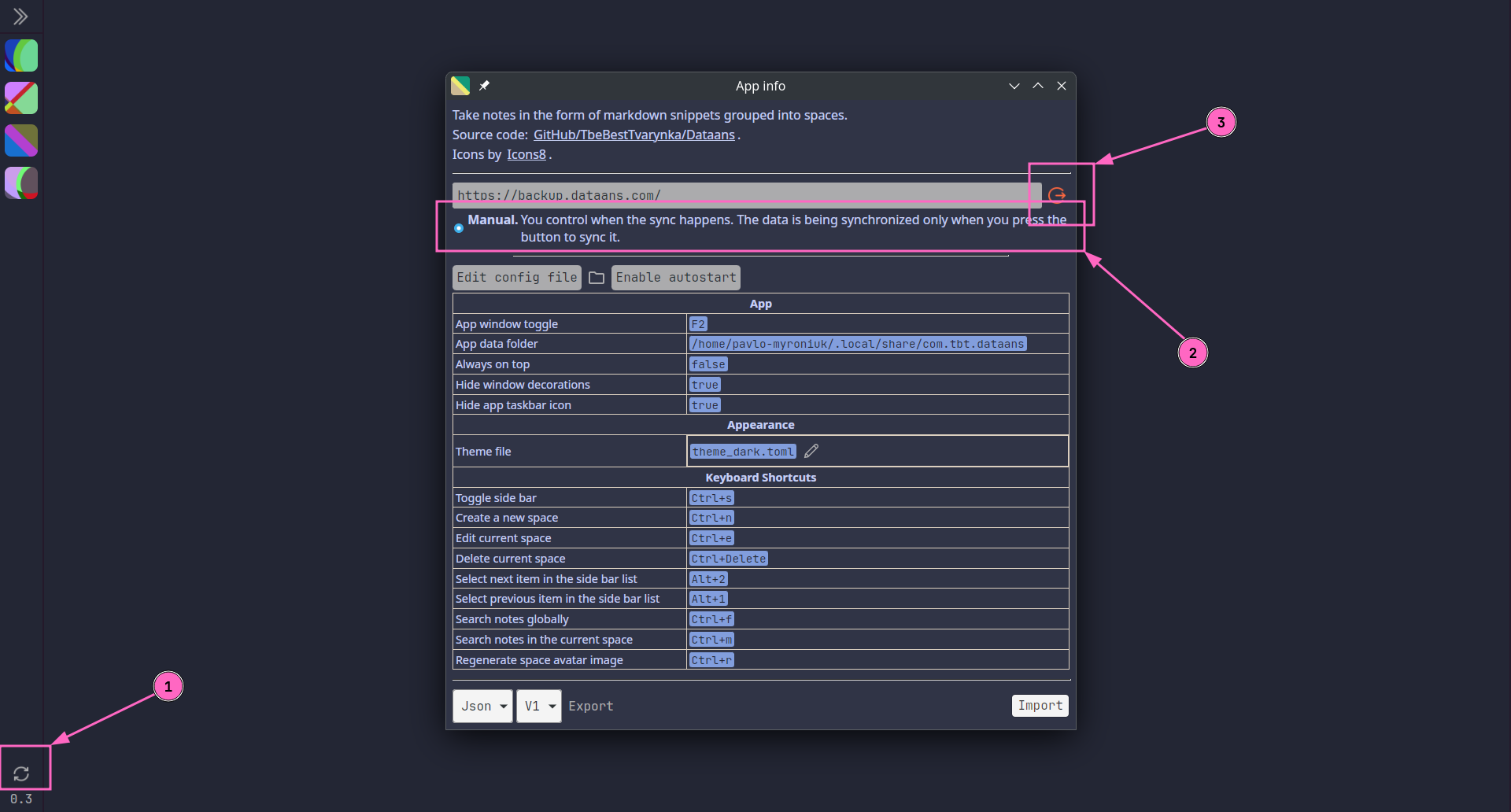

This is what the user sees after successful sign-in:

From the screenshot above:

- Button that triggers data synchronization.

- Synchronization mode. Currently, only manual mode is implemented. It means that whenever the user wants to sync the data, they need to press the button 🙃.

- Sign out button.

Now, the user is able to sync app data. The synchronization process happens in the background. So, the user can freely use the app without any limitations during synchronization. Once the sync is complete, a small pop-up message will appear.

How it works

Now let's talk about interesting things: how it works 😊. Below are detailed technical explanations. You can jump right to the interesting part. There is no need to read it in order.

Infrastructure

This section is boring, because I don't like to describe infrastructure in detail. So, if you are not interested in such things, then skip it 😉.

The overall infrastructure is straightforward.

I use Cloudflare for endpoint protection, auth, DDoS protection, and domain hosting.

I use Fly.io as a main cloud provider. I like the microVM approach that they use. It is my first app deployed on fly.io. The deployment process was simple and fast. It reminded me of the old, almost forgotten feeling "it just works".

I use the Neon.com PostgreSQL provider to store user data. I did not like fly.io's Postgres integration because it does not shut down automatically when it is not in use. So, I decided to try Neon. They have a very limited free tier, but it is ok for me (at least for now). I am very satisfied with the database start time. I think it is a great choice for small personal projects.

I use Tigris object storage to store user files, mainly because it is integrated into fly.io. Its free tier is more than enough for me.

Auth

Initially, I implemented the authentication manually. It was a simple password-based authentication with a primitive session-based authorization implementation.

However, I then decided to throw it out. I pondered on it and decided that I do not want to maintain a ton of code dedicated to auth. I wanted to delegate it to some external service. I explored the most popular solutions and chose Cloudflare Zero Trust Access.

It works very simply (as I wanted). Cloudflare works as a proxy and intercepts requests to my web service. If the request does not have an authentication token inside, then Cloudflare does the auth. I configured the allowed emails list, and Cloudflare sends a code to the entered email during sign-in. Cloudflare injects a token into the request after successful sign-in and sends the request to my web server.

The only thing I need to do on my web server's side is to validate Cloudflare's JWT token. The validation algorithm is pretty straightforward:

- Extract the cf-access-jwt-assertion header.

- Get Cloudflare's signing keys.

- Validate JWT using any common library.

You can read the full code here: github/TheBestTvarynka/Dataans/6c898a01/crates/web-server/src/routes/mod.rs. There is an important caveat:

By default, Access rotates the signing key every 6 weeks. This means you will need to programmatically or manually update your keys as they rotate. (src)

Therefore, if you decide to hardcode signing keys, ensure that you rotate them regularly. I decided to follow another path: the web server requests the signing keys every time it needs to verify the JWT token. Yes, it is not the best option, but it is enough for me (Worse Is Better).
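The first validation step can be sketched with the standard library alone. This is a minimal illustration, not the web server's actual code: the function names and the RawJwt type are made up for this example, and the signature check itself is deliberately left to a JWT library.

```rust
/// Header that Cloudflare Access attaches to proxied requests.
const CF_ACCESS_HEADER: &str = "cf-access-jwt-assertion";

/// The three dot-separated parts of a compact JWT, still base64url-encoded.
/// (Hypothetical helper type for this sketch.)
#[derive(Debug, PartialEq)]
pub struct RawJwt<'a> {
    pub header: &'a str,
    pub payload: &'a str,
    pub signature: &'a str,
}

/// Finds the Cloudflare Access token among the request headers.
/// HTTP header names are case-insensitive, so the lookup ignores case.
pub fn extract_assertion<'a>(headers: &'a [(&'a str, &'a str)]) -> Option<&'a str> {
    headers
        .iter()
        .find(|(name, _)| name.eq_ignore_ascii_case(CF_ACCESS_HEADER))
        .map(|(_, value)| value.trim())
}

/// Splits a compact JWT into its parts and rejects obviously malformed tokens.
/// This does NOT verify the signature; that still needs the signing keys.
pub fn split_jwt(token: &str) -> Option<RawJwt<'_>> {
    let mut parts = token.split('.');
    let (header, payload, signature) = (parts.next()?, parts.next()?, parts.next()?);
    let malformed = parts.next().is_some()
        || header.is_empty()
        || payload.is_empty()
        || signature.is_empty();
    if malformed {
        return None;
    }
    Some(RawJwt { header, payload, signature })
}
```

A request without the header, or with a token that does not have exactly three parts, can be rejected before any request for the signing keys is made.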

Great. Simple, secure, easy to set up 🚀. I asked ChatGPT for a step-by-step guide, which worked on the first try. I tried to access the protected route from the browser, and it worked perfectly.

However, there is a small, tiny problem: I am not writing a website, I am writing a desktop app using Tauri.

I cannot rely on cookies alone because I want to make requests from the app backend using the reqwest HTTP client.

Therefore, I need to extract the CF_Authorization token somehow and save it somewhere in the app backend.

Additionally, the app needs to ask the user for the password and passphrase. I spent some time thinking about the easiest way to implement this.

I came up with the idea of sending the cookie token, password, and passphrase in one Tauri command to the app backend.

When the user passes the Cloudflare check, the web server responds with the authorize.html page.

It is a simple form with an embedded script. When the user submits the form, the script extracts the CF_Authorization token, reads the typed password and passphrase, and sends all this data to the app backend.

Fortunately, Tauri injects the window.__TAURI__ object into every spawned webview. Thus, even when the webview is created from an external URL (not the direct app frontend), it can still send Tauri commands.

That's all. The rest is easy to implement. When the app backend receives the data, it generates an encryption key (from the password and passphrase), tests the auth token, and saves it... There is a corresponding piece of code, if you want to see it.

Data encryption

The local state is unencrypted and saved in a SQLite database. But the app encrypts the data before sending it to the sync server. Dataans follows one rule regarding user data: we trust the user's computer and the user is responsible for keeping the local state safe, but we do not trust any remote servers and always encrypt data before sending it.

We need to derive a key to encrypt the data. The app uses the PBKDF2 function and needs the user's password and passphrase to derive the key.

// Pseudocode

let password = sha256(user_password);

let salt = sha256(passphrase);

let key = pbkdf2_hmac(password, salt, iterations);

The derived key is stored in plaintext in the profile.json file. There is no need to hide this key, because all data is local and unencrypted.

As I wrote above, all data is encrypted before sending to the sync server. The app uses the AES256-GCM algorithm and appends an HMAC-SHA256 checksum.

// Pseudocode

let nonce = random(NONCE_LEN);

let cipher_text = aes_gcm.encrypt(key, nonce, plaintext);

let checksum = hmac_sha256(key, plaintext);

let result = nonce + cipher_text + checksum;

AES256-GCM provides strong encryption, and HMAC-SHA256 provides data integrity. The same for decryption, but in reverse:

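// Pseudocode

let (nonce, cipher) = result.split_at(NONCE_LEN);

let (cipher_text, checksum) = cipher.split_at(cipher.len() - CHECKSUM_LEN);

let plaintext = aes_gcm.decrypt(key, nonce, cipher_text);

let calculated_checksum = hmac_sha256(key, plaintext);

if checksum != calculated_checksum { return Err("checksum mismatch"); }

Ok(plaintext)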
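The framing part of this scheme (how the three pieces are glued together and split apart again) can be sketched without any crypto crates. This is a minimal illustration: the pack and unpack names are made up, the 12-byte nonce is an assumption based on the usual AES-GCM nonce size, and 32 bytes is the HMAC-SHA256 output length.

```rust
/// Assumed nonce size: the usual 96 bits for AES-GCM.
const NONCE_LEN: usize = 12;
/// HMAC-SHA256 always produces 32 bytes.
const CHECKSUM_LEN: usize = 32;

/// Packs the three parts as `nonce || cipher_text || checksum`.
pub fn pack(
    nonce: &[u8; NONCE_LEN],
    cipher_text: &[u8],
    checksum: &[u8; CHECKSUM_LEN],
) -> Vec<u8> {
    let mut result = Vec::with_capacity(NONCE_LEN + cipher_text.len() + CHECKSUM_LEN);
    result.extend_from_slice(nonce);
    result.extend_from_slice(cipher_text);
    result.extend_from_slice(checksum);
    result
}

/// Splits a packed message back into `(nonce, cipher_text, checksum)`.
/// Returns `None` when the input is too short to hold a nonce and a checksum.
pub fn unpack(message: &[u8]) -> Option<(&[u8], &[u8], &[u8])> {
    if message.len() < NONCE_LEN + CHECKSUM_LEN {
        return None;
    }
    let (nonce, rest) = message.split_at(NONCE_LEN);
    let (cipher_text, checksum) = rest.split_at(rest.len() - CHECKSUM_LEN);
    Some((nonce, cipher_text, checksum))
}
```

Checking the length up front matters because split_at panics on an out-of-range index, and data coming back from a remote server should never be able to crash the app.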

That is all for the encryption part. The overall encryption process is simple, secure, and, most importantly, boring. I like the fact that it took a bit more than 100 lines of code to implement all the needed cryptography functions in Rust: github/TheBestTvarynka/Dataans/6c898a01/dataans/src-tauri/src/dataans/crypto.rs.

Synchronization

(If you do not want to read the whole section, then you can read the code: github/TheBestTvarynka/Dataans/6c898a01/dataans/src-tauri/src/dataans/sync/mod.rs. It is well-documented.)

I spent around half a year trying different approaches to synchronization. It was hard to choose a suitable sync algorithm. I had never implemented such features and had been overthinking it for months. First, I read the rsync algorithm documentation and tried to apply it. Then, I tried to store the hash of every object in the database and compare these hashes to determine the state diff. At some point, I went even further and tried to apply Merkle trees 🤪.

Everything looked overcomplicated. But I did not give up and decided to make things even more complicated: CRDTs. I was disappointed again because it wasn't what I needed.

At some point, I paid attention to the Operation-Based CRDTs. Synchronizing the operations log instead of the full app state looks simple. I elaborated this idea further and finally decided to use it for the synchronization.

Every time a user does any action that alters the local state, the app logs this action. It is called a user operation, or an operation. When the user starts the sync, the app and the sync server synchronize the operation lists. Now the task is a bit simpler: there are two lists and the app needs to synchronize their content.

The naive solution is to take the local (app state) and remote (sync server state) operation lists and compare them operation by operation. But it can be slow, the number of operations can be large, and I still want to overengineer things. Thus, I introduced blocks and block hashes.

A block is a collection of N sequential operations sorted by timestamp.

// `operations` are sorted by timestamp.

let block_1 = operations[0..N];

let block_2 = operations[N..2 * N];

// ...

let block_n = operations[(n - 1) * N..n * N];

I am sure you got the idea. All operations are divided into chunks by N, which I call blocks. Nothing more, nothing less.

A block hash is a hash of sequential hashes of block operations.

let block_hash = hash(hash(operation_1) + hash(operation_2) + ... + hash(operation_N));

This is the core of my small, simple optimization. During the first synchronization step, the app requests the server's block hashes and calculates the same block hashes over the local operations. Blocks with the same hashes contain the same operations. Thus, the app can safely discard such blocks.
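The chunking and block hashing described above might look like this in Rust. Treat it as a sketch under assumptions: the Operation fields are hypothetical, and the standard library's DefaultHasher stands in for whatever cryptographic hash the app really uses (it is only stable within one build, so it would not be suitable for comparing hashes with a server).

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical, trimmed-down operation record.
#[derive(Hash, Clone)]
pub struct Operation {
    pub id: u64,
    pub timestamp: u64,
    pub payload: String,
}

/// Stand-in hash function; a real implementation would use a cryptographic hash.
fn hash_of<T: Hash>(value: &T) -> u64 {
    let mut hasher = DefaultHasher::new();
    value.hash(&mut hasher);
    hasher.finish()
}

/// Splits `operations` (already sorted by timestamp) into blocks of `block_size`
/// and returns one hash per block: the hash of the operation hashes, in order.
pub fn block_hashes(operations: &[Operation], block_size: usize) -> Vec<u64> {
    assert!(block_size > 0, "block size must be positive");
    operations
        .chunks(block_size)
        .map(|block| {
            let operation_hashes: Vec<u64> = block.iter().map(hash_of).collect();
            hash_of(&operation_hashes)
        })
        .collect()
}
```

Note that the last block may hold fewer than block_size operations. Its hash simply changes once more operations arrive, which marks it as different on the next sync.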

Conflicts? The last write wins. Every object in the local database has an updated_at timestamp.

When the app detects a conflict during synchronization, it compares the two timestamps, and the last (latest) write wins.
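As a small illustration of the last-write-wins rule, here is a sketch with a hypothetical Note type. The tie-breaking choice (keep the local version when the timestamps are equal) is an assumption of this example, not something the app is known to do.

```rust
/// Hypothetical note record; only the fields needed for conflict resolution.
#[derive(Debug, Clone, PartialEq)]
pub struct Note {
    pub id: u64,
    pub text: String,
    /// Unix timestamp (milliseconds) of the last modification.
    pub updated_at: u64,
}

/// Last write wins: keeps whichever version was modified most recently.
/// On a tie the local version is kept (an arbitrary choice for this sketch).
pub fn resolve_conflict(local: Note, remote: Note) -> Note {
    if remote.updated_at > local.updated_at {
        remote
    } else {
        local
    }
}
```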

Let's summarize the synchronization algorithm. I prepared a scheme for you to make it easier to understand:

Step 1. The app requests the server's block hashes and calculates local block hashes concurrently. The app will have two lists of hashes as the result.

Step 2. The app compares block hashes from the two lists pair by pair. It stops at the first pair of unequal hashes (or when one of the lists ends) and discards all hashes before this pair. What remains are the blocks that differ between the two sides. The app does not need to compare hashes after the first unequal pair: all operations are sorted by timestamp before the block hashes are calculated, so all subsequent blocks will also have different hashes.
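Step 2 boils down to finding the length of the common prefix of two lists. A minimal sketch, with made-up function names and a generic hash type:

```rust
/// Counts how many leading blocks have identical hashes on both sides.
/// Comparison stops at the first unequal pair or when either list ends.
pub fn equal_blocks_count<H: PartialEq>(local: &[H], remote: &[H]) -> usize {
    local
        .iter()
        .zip(remote)
        .take_while(|(local_hash, remote_hash)| local_hash == remote_hash)
        .count()
}

/// Number of operations covered by the equal blocks, i.e. the offset from
/// which both sides still have to exchange operations.
pub fn operations_to_skip(equal_blocks: usize, block_size: usize) -> usize {
    equal_blocks * block_size
}
```

For example, with hash lists [1, 2, 3, 4] and [1, 2, 9, 4], the first two blocks match, so only operations after the first two blocks need to be exchanged, even though the fourth hashes happen to be equal.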

Step 3. At this point, the app knows how many blocks are equal on the local and remote sides. So, the app requests all operations after equal blocks and queries local operations (also after equal blocks).

Step 4. The app compares the local and remote operation lists (both sorted by timestamp). The best thing is that the app does not need to compute a Cartesian product of these two lists. It compares operations pair by pair until it finds a pair with different ids (or until one of the lists ends).

We can be sure that all operations after that point also have different ids, because nothing else is possible: if the app is aware of some operation, then it is also aware of all operations before it. The app cannot synchronize only later operations and skip earlier ones. The same is true for the server's side.

// Step 4: Find the difference between local and remote operations.

let mut operations_to_upload = Vec::new();

let mut operations_to_apply = Vec::new();

loop { /* compare the lists pair by pair; the full body is in sync/mod.rs */ }

The app will have operations_to_upload and operations_to_apply lists as the result.
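Under the guarantee stated in Step 4 (after the first mismatching pair, no later ids match), the diff reduces to skipping the shared prefix. The following is a sketch with a hypothetical Operation type, not the app's actual loop:

```rust
/// Hypothetical operation record; `id` is unique per operation.
#[derive(Debug, Clone, PartialEq)]
pub struct Operation {
    pub id: u64,
    pub timestamp: u64,
}

/// Walks both timestamp-sorted lists in lockstep and skips the shared prefix.
/// Whatever remains locally has to be uploaded; whatever remains remotely
/// has to be applied to the local database.
/// Returns `(operations_to_upload, operations_to_apply)`.
pub fn diff_operations(
    local: &[Operation],
    remote: &[Operation],
) -> (Vec<Operation>, Vec<Operation>) {
    let shared = local
        .iter()
        .zip(remote)
        .take_while(|(local_op, remote_op)| local_op.id == remote_op.id)
        .count();

    let operations_to_upload = local[shared..].to_vec();
    let operations_to_apply = remote[shared..].to_vec();
    (operations_to_upload, operations_to_apply)
}
```

The cost is linear in the length of the shorter list, which is the whole point of avoiding the Cartesian product.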

Step 5. The last step is simple. The app uploads the operations_to_upload operations to the sync server and applies operations_to_apply operations on the local database.

If everything succeeds, we can be sure that local and remote states are the same ☺️.

Files sync

Aren't you forgetting something important? 🙃

Let me remind you. Dataans supports attaching files to notes. Only file metadata is saved in the local SQLite database. The file contents are saved locally in the file system as files. It means that we also need to synchronize files alongside the SQLite DB state.

A registered file is a file that is recorded in the local SQLite database. Every file has an is_uploaded property.

The app iterates over all registered files in the local database. Based on this property and the file existence in FS, the app does one of the following:

- is_uploaded is true and the file exists in FS - then the app does nothing. Everything is good.

- is_uploaded is true and the file does not exist in FS - then the app downloads the file from the sync server.

- is_uploaded is false and the file exists in FS - then the app uploads the file and sets is_uploaded to true. The file is encrypted before uploading.

- is_uploaded is false and the file does not exist in FS - then the app logs a problem. Ideally, such a situation should not even exist. If it happens, then it means that someone/something has modified the app data directory (or a bug, but come on, it is definitely something else 🙃).
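The four cases form a small decision table over two booleans, which maps naturally onto a match expression. The FileAction enum and the file_action function are names invented for this sketch:

```rust
/// What the file sync task should do with one registered file.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum FileAction {
    /// Uploaded and present locally: nothing to do.
    Nothing,
    /// Uploaded but missing locally: fetch it from the sync server.
    Download,
    /// Present locally but not uploaded yet: encrypt and upload it.
    Upload,
    /// Neither uploaded nor present locally: log the inconsistency.
    ReportMissing,
}

/// Picks the action from the `is_uploaded` flag and the file's presence on disk.
pub fn file_action(is_uploaded: bool, exists_locally: bool) -> FileAction {
    match (is_uploaded, exists_locally) {
        (true, true) => FileAction::Nothing,
        (true, false) => FileAction::Download,
        (false, true) => FileAction::Upload,
        (false, false) => FileAction::ReportMissing,
    }
}
```

Matching on the tuple lets the compiler check that all four combinations are covered.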

The file synchronization task runs concurrently with the main synchronization task. The synchronization process ends when both tasks finish.

The app can discover new files during database sync. For example, when you add a new file on the first device, synchronize the data, and then try to sync the data on the second device.

When this happens, the main synchronization task notifies the files synchronization task that there is a new file to download. These two tasks communicate over the channel.

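// https://github.com/TheBestTvarynka/Dataans/blob/6c898a01afc0942cb94b5dbc822349d8afa924ee/dataans/src-tauri/src/dataans/sync/mod.rs#L133-L138

// Simplified: argument and variable names are illustrative.

let (sender, receiver) = channel();

let main_sync_fut = synchronizer.synchronize(sender);

let file_sync_fut = synchronizer.synchronize_files(receiver);

let (main_result, file_result) = join!(main_sync_fut, file_sync_fut);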
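The same shape can be tried out with nothing but the standard library. The sketch below swaps the app's async tasks for OS threads and an std::sync::mpsc channel, so it only mirrors the idea; all function names and the use of plain u64 file ids are assumptions of this example.

```rust
use std::sync::mpsc;
use std::thread;

/// Stand-in for the main sync task: it "discovers" new file ids while
/// applying remote operations and announces them over the channel.
fn synchronize(discovered: Vec<u64>, new_files: mpsc::Sender<u64>) {
    for file_id in discovered {
        // The receiver may already be gone; a failed send is not fatal here.
        let _ = new_files.send(file_id);
    }
    // `new_files` is dropped here, which closes the channel.
}

/// Stand-in for the file sync task: handles every announced file and
/// returns the ids it processed.
fn synchronize_files(new_files: mpsc::Receiver<u64>) -> Vec<u64> {
    // `iter()` blocks for the next message and ends once every sender is dropped.
    new_files.iter().collect()
}

/// Runs both tasks concurrently and waits for both of them to finish.
pub fn run_sync(discovered: Vec<u64>) -> Vec<u64> {
    let (sender, receiver) = mpsc::channel();
    let main_sync = thread::spawn(move || synchronize(discovered, sender));
    let file_sync = thread::spawn(move || synchronize_files(receiver));

    main_sync.join().expect("main sync task panicked");
    file_sync.join().expect("file sync task panicked")
}
```

Closing the channel by dropping the sender is what lets the file task know that no more files will be announced, so the overall sync can end once both sides are done.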

What is next?

I am happy I managed to implement it. I was a bit frustrated about it because the path to the final solution was very rough. Such an intuitive and simple feature turned out to be quite complex and hard to implement.

Currently, I have implemented most of the major app backend features I needed. I am continuing to develop the Dataans. But I will focus more on the app frontend features. I think the next major releases will contain a lot of UI and UX improvements. I have a long list of amazing features ✨.

If you are interested in contributing to or using the Dataans, feel free to ask any questions ☺️.